After publishing sqlite_async, a high-performance asynchronous interface for SQLite on Dart & Flutter, we wanted to compare its performance to other in-app databases for Flutter: sqflite, ObjectBox and Isar.

Summary

- With some exceptions, all the evaluated databases give similar performance.

- Using transactions & batching makes a much bigger difference in performance than which database is used.

- WAL mode in SQLite is great for performance of many smaller write transactions.

Methodology

- Benchmark tests were loosely based on old SQLite benchmarks that include 16 separate tests, of which 15 were used.

- Where available, two implementations of each database engine were tested:

- ~A “default” implementation where the focus is on ease of implementation rather than performance. Only very simple performance optimizations are applied here, for example: combining writes into a single operation.

- ~A "batched" implementation that aims to minimize the performance overhead of individual async operations. This may introduce some additional complexity to batch operations, but increases performance. Only performance optimizations readily available in the library's documentation or examples are considered. This is done by either using a batch API provided by the library, or running synchronous operations in a separate Isolate.

- Benchmark tests were run on iOS, Android and Linux.

- Benchmark tests were run 3 times on each platform and for each database engine implementation.

List of all benchmark tests used:

- Test 1: 1000 INSERTs

- Test 2: 25000 INSERTs in a transaction

- Test 3: 25000 INSERTs into an indexed table

- Test 4: 100 SELECTs without an index

- Test 5: 100 SELECTs on a string comparison

- Test 7: 5000 SELECTs with an index

- Test 8: 1000 UPDATEs without an index

- Test 9: 25000 UPDATEs with an index

- Test 10: 25000 text UPDATEs with an index

- Test 11: INSERTs from a SELECT

- Test 12: DELETE without an index

- Test 13: DELETE with an index

- Test 14: A big INSERT after a big DELETE

- Test 15: A big DELETE followed by many small INSERTs

- Test 16: Clear table

Notes

- Since the tests are based on those from SQLite, the operations used may favor SQLite in some cases. For example, copying data from one table to another (Test 11) is a native operation using a single query in SQLite, while this requires separately querying and inserting data in Isar and ObjectBox.

- Some differences from the SQLite benchmarks:

- ~Tables and indexes are pre-created, to better match typical usage, and to allow fair comparison with databases that don't support dynamic tables and indexes.

- ~Tables have an explicit integer primary key "id", auto-populated by the respective databases. This is not directly used in the tests.

- ~Clear instead of drop tables.

- ~The exact queries may be different, but the outcome should be roughly the same.

- On specific tests:

- ~Test 1: This does individual inserts — no batching or transactions. This is not recommended for large volumes, but does demonstrate the overhead per transaction. The WAL journal mode on SQLite gives it an advantage on this test.

- ~Test 6: Not present, since it only creates indexes, which are avoided in these benchmarks.

- ~Test 7: This tests a "between" operation on an indexed column (WHERE b >= ? AND b < ?). This is one test where ObjectBox does not perform as well as the others — it's possible that this operation does not currently use indexes in ObjectBox.

- Only asynchronous/non-blocking APIs are used, unless the code runs in a background Isolate. Synchronous calls have the ability to block the UI / introduce stutter, so we believe it best to avoid them completely.

- Benchmark limitations:

- ~The benchmark implementation does not measure UI performance during database operations yet. Despite the database operations being async, it may still block the UI Isolate in some cases.

- ~No UI yet — all results are logged to the console.

- ~The benchmark implementation does not test concurrent operations.

Database-specific Notes

sqlite_async

- sqlite_async is a performance-optimized modification of the sqlite3 package.

- Only async operations are directly supported.

- Uses WAL journal mode (library default). [.inline-code-snippet]PRAGMA wal_checkpoint(RESTART)[.inline-code-snippet] is run at the end of every test, to better allocate write time to the relevant test.

- Batched mode: Uses prepared statements internally.

sqflite

- Uses DELETE journal mode (library default).

- sqflite_ffi is used for all tests. This has much better performance than the default implementation, and is a simple change.

Isar

- Uses async operations.

ObjectBox

- ObjectBox does support async operations from version 2.0, but not in a transaction.

- For async transactions, everything in the transaction must run in a separate isolate. It doesn't cause any issues in these benchmarks, but would potentially complicate code in real-world applications.

- Since there is no way to run an async transaction without running in a separate Isolate, only one implementation is provided.

Comparing Flutter Database Performance On iOS

Test device: iPhone 7, iOS 15

The table below shows the average test results across 3 test runs for each database engine implementation. Cells show time taken to complete the test in milliseconds and are shaded dark green (best) to amber to dark red (worst) to show relative performance for each test.

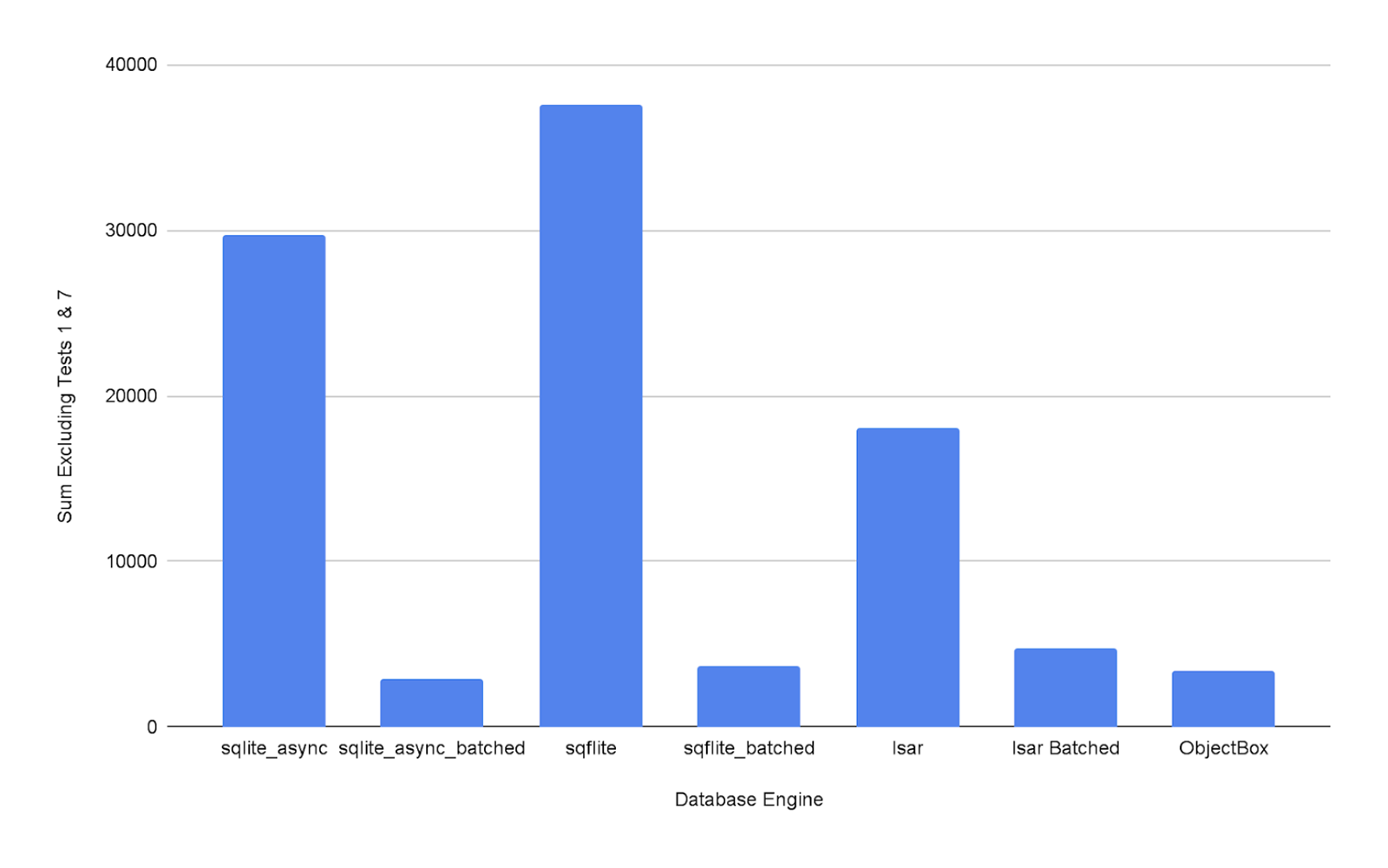

The graph below shows the sum of average test results for each database engine, ignoring tests 1 and 7 for their potential to skew the data: (As you can see, using transactions & batching makes a much bigger difference in performance than which database is used.)

Comparing Flutter Database Performance On Android

Test device: OnePlus Nord N10, Android 11

Here is a table showing average test results for different Flutter databases running on Android, set up as above:

Here is a similar graph as above, showing the sum of average test results, ignoring tests 1 and 7:

Comparing Flutter Database Performance On Linux

Test device: Dell XPS 13, Ubuntu 22.04

Here is a table showing average test results for different Flutter databases running on Linux, set up as above:

And here is the graph of the sum of average test results for Linux, also ignoring tests 1 and 7:

Benchmark GitHub Project

The benchmark project with full details including database files and acknowledgements is available here.