Introduction

In this guide we’ll showcase how to migrate an existing MongoDB Realm & Atlas Device Sync application to use PowerSync. This guide uses the PowerSync React Native SDK and we’ve based this guide on Realm’s %%rn-mbnb%% example app, which can be found here.

The complete source code for the migrated application can be found here.

Overview of Migration Steps

- PowerSync Service setup

- Configure Sync Rules

- Test your Sync Rules

- Backend API setup

- Client-side code migration

- Install PowerSync

- Remove Realm

- Migrate Realm schema

- Add PowerSync Backend Connector

- Set up PowerSync database and connect UI

- Accessing the database

- Migrate database queries

- Migrate render function

Migration Steps

PowerSync Service Setup

To get started with PowerSync, we recommend starting with the setup of a PowerSync Service instance. This guide uses PowerSync Cloud, but an alternative would be to spin up a self-hosted instance of PowerSync.

To create a PowerSync Cloud instance, follow these steps:

- Sign up for free for PowerSync if you’ve not done so already.

- Create a new project in the PowerSync Dashboard

- Create a new PowerSync instance in the project

- Enter the MongoDB connection details

- Before you save and deploy, hit ‘Test connection’

- See our documentation for required permissions if the connection is failing

- Once the connection has been established, select ‘Save and deploy’

Configuring Sync Rules

PowerSync allows configuring Sync Rules that specify which MongoDB data to sync with which users. Sync Rules allow for dynamic filtering and user-specific data partitioning to ensure that only the relevant data subsets are synced to individual app users.

Copy these sample Sync Rules into your %%sync-rules.yaml%% file in the PowerSync Dashboard:

bucket_definitions:

global:

data:

- SELECT _id as id, * from "listingsAndReviews"

Note: Since MongoDB uses %%_id%% for the ID of a document whereas PowerSync uses %%id%% on the client-side, we need to %%SELECT _id as id%% in the Sync Rules to extract the %%_id%% field and use it as the id of the %%listingsAndReviews%% table which will be exposed by PowerSync.

In this example we’re creating a “global bucket” to sync all %%listingsAndReviews%% documents. In production apps, global app data should be added to such a “global bucket”, whereas user-specific data should be placed into appropriate separate buckets. Buckets can be thought of as logical groupings of data that the user needs on their device for the app to function.

Once you’ve created the Sync Rules, go ahead and deploy them in the PowerSync Dashboard.

Test Your Sync Rules

A quick and easy way to test and simulate your Sync Rules is to use the PowerSync Diagnostics app. To do so, you’ll need a development token (a temporary authentication token that can be used during development). Take the following steps:

- Right click on the PowerSync instance in the PowerSync Dashboard

- Select ‘Generate development token’

- Since we are only using a single Global Bucket for now, you can enter anything into the ‘Token Subject’ popup

- Hit ‘Continue’ and copy the development token

- Open diagnostics-app.powersync.com

- Paste in the development token

- The Endpoint URL will auto-populate because it’s embedded in the development token


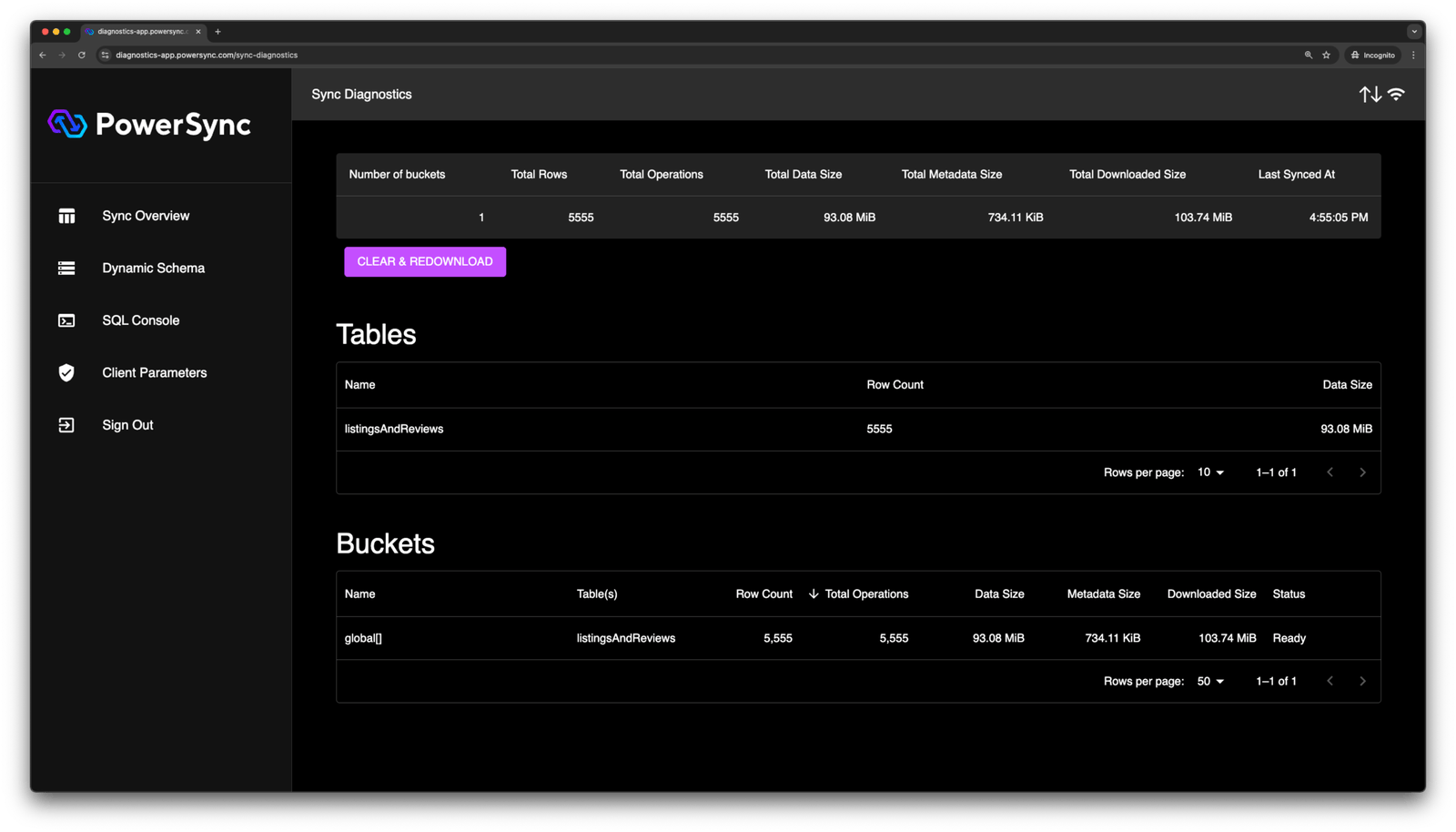

Once you’ve logged in, you should see something like this:

The Diagnostics app is very useful when it comes to debugging and quickly validating Sync Rules. There is also a SQL Console that you can use to query data in the client-side SQLite database provided by PowerSync to get a feel for how PowerSync works.

More About Sync Rules

Our example Sync Rules above are pretty straightforward. What if we want to do something more advanced, such as syncing specific data to the user? Let’s say we want to sync listings for a specific country. We could do the following:

bucket_definitions:

by_country:

parameters: SELECT request.jwt() ->> 'country' as country

data:

- SELECT _id as id, * FROM "listingsAndReviews" WHERE address ->> 'country' = bucket.country

In the above example, the parameter query creates a bucket for the country that it returns. We assume that the signed-in user’s JWT contains a %%country%% property in the payload. To extract values from the JWT payload, we can use the JSON operator %%->>%%, e.g. %%request.jwt() ->> 'country'%%. If the %%country%% field is in a nested JSON object, say %%address%%, we can use the same JSON operator to query on %%address.country%%, as the data query above does.
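To make the bucket idea more concrete, the combined effect of the parameter query and the data query can be modelled in plain TypeScript. This is only a conceptual sketch and not how the PowerSync Service is implemented; the %%JwtPayload%% and %%Listing%% types, the %%listingsForUser%% function and the sample data are hypothetical names and values introduced purely for illustration.

```typescript
// Conceptual model only: which listings end up in a user's `by_country` bucket.
type JwtPayload = { sub: string; country?: string };
type Listing = { _id: string; name: string; address: { country: string } };

function listingsForUser(jwt: JwtPayload, listings: Listing[]): Listing[] {
  // Parameter query: SELECT request.jwt() ->> 'country' as country
  const country = jwt.country;
  if (country === undefined) {
    // Assumption in this model: without a country claim no listing matches.
    return [];
  }
  // Data query: ... WHERE address ->> 'country' = bucket.country
  return listings.filter((listing) => listing.address.country === country);
}

// Made-up sample data for the sketch.
const sample: Listing[] = [
  { _id: "1", name: "Listing A", address: { country: "Portugal" } },
  { _id: "2", name: "Listing B", address: { country: "Brazil" } },
];

console.log(listingsForUser({ sub: "user-1", country: "Portugal" }, sample).map((l) => l.name));
```

Two users with different %%country%% claims would each receive a different subset of the collection, while the Sync Rules themselves stay the same.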

For more details on how to use Sync Rules in PowerSync, refer to the docs.

Backend API Setup

PowerSync stores all client-side data modifications in an upload queue in SQLite, and allows developers to control exactly how entries in this queue are written back to MongoDB, by uploading modifications to the developer’s own backend API. (For a detailed overview of the overall PowerSync architecture, see our docs.)

We’ll illustrate what that would entail through a stub Node.js backend API implementation that uses Express. This API will receive uploads from the client.

If there are local modifications present in the upload queue, the PowerSync client SDK will automatically connect to the configured backend API to upload the modifications sequentially. The Realm demo %%rn-mbnb%% that we based this guide on does not write data back to the source MongoDB database. However, we’ve included this functionality in this guide to demonstrate how it can be implemented, as client applications often need to sync local changes back to the MongoDB database.

First let’s create a file called %%mongoPersistence.ts%%. This will focus on the MongoDB client connection and the operations that need to be written to the source MongoDB database:

import {Db, MongoClient, ObjectId} from "mongodb";

export type UpdateEvent = {

table: string;

data: any;

}

export default class MongoPersistence {

private client: MongoClient;

private database: Db;

constructor(config: { name: string, uri: string }) {

this.client = new MongoClient(`${config.uri}`);

this.database = this.client.db(config.name);

}

async init () {

await this.client.connect();

}

async update (updateEvent: UpdateEvent): Promise<void> {

try {

console.log(updateEvent);

const filter = { _id: new ObjectId(updateEvent.data.id) };

const collection = this.database.collection(updateEvent.table);

const updateDoc = {

$set: { ...updateEvent.data }

};

const result = await collection.updateOne(filter, updateDoc);

console.log(result);

} catch (error) {

console.log(error);

throw error;

} finally {

await this.client.close();

}

}

async upsert (updateEvent: UpdateEvent): Promise<void> {

try {

console.log(updateEvent);

const filter = { _id: new ObjectId(updateEvent.data.id) };

const collection = this.database.collection(updateEvent.table);

const updateDoc = {

$set: { ...updateEvent.data }

};

const options = {

upsert: true,

};

const result = await collection.updateOne(filter, updateDoc, options);

console.log(result);

} catch (error) {

console.log(error);

throw error;

} finally {

await this.client.close();

}

}

async delete (updateEvent: UpdateEvent): Promise<void> {

try {

console.log(updateEvent);

const filter = { _id: new ObjectId(updateEvent.data.id) };

const collection = this.database.collection(updateEvent.table);

const result = await collection.deleteOne(filter);

console.log(result);

} catch (error) {

console.log(error);

throw error;

} finally {

await this.client.close();

}

}

}

This class initializes a connection to MongoDB using the Node.js %%MongoClient%% driver. Its three methods (%%upsert%%, %%update%% and %%delete%%) write changes to MongoDB depending on the type of change that occurred on the client (%%PUT%%, %%PATCH%% and %%DELETE%% respectively, which are the types of client-side changes tracked by the PowerSync client SDK).
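One detail in the %%update%% and %%upsert%% methods above is that spreading %%updateEvent.data%% into %%$set%% also writes the client-side %%id%% value into the document as a separate %%id%% field. If that is not wanted, the filter and update document could be built along the following lines. This is a minimal sketch: %%buildUpdate%% is a hypothetical helper, and it assumes string %%_id%% values (where a collection uses ObjectIds, the value would be wrapped as in the class above).

```typescript
// Hypothetical helper: turn an upload event into a MongoDB filter and update document.
type UpdateEvent = { table: string; data: Record<string, unknown> & { id: string } };

function buildUpdate(event: UpdateEvent): {
  filter: { _id: string };
  update: { $set: Record<string, unknown> };
} {
  // Separate the client-side `id` from the remaining fields.
  const { id, ...fields } = event.data;
  return {
    filter: { _id: id }, // assumption: string `_id`; wrap in ObjectId where needed
    update: { $set: fields }, // `id` is not written back as an extra field
  };
}

const built = buildUpdate({
  table: "listingsAndReviews",
  data: { id: "example-id", name: "Updated name" },
});
console.log(JSON.stringify(built));
```

The returned %%filter%% and %%update%% objects have the shape that %%collection.updateOne(filter, updateDoc)%% is called with in the class above.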

Next, we have our API layer which exposes endpoints to accept uploads from client applications. It will use the %%MongoPersistence%% class above to process the changes:

import {Router, Request, Response} from "express";

import config from "../../config";

import MongoPersistence from "../mongo/mongoPersistence";

export default class DataController {

public router: Router;

constructor() {

this.router = Router();

this.initRoutes();

}

private initRoutes() {

this.router.patch("/", this.update);

this.router.put("/", this.put);

this.router.delete("/", this.delete);

}

private async update(req: Request, res: Response) {

try {

if (!req.body) {

res.status(400).send({

message: 'Invalid body provided'

});

return;

}

const mongoPersistence = new MongoPersistence({

name: config.database.database,

uri: config.database.uri

});

await mongoPersistence.init();

await mongoPersistence.update(req.body);

res.status(200).send();

} catch (err) {

console.log(err);

res.status(500).send({

message: err,

});

}

}

private async put(req: Request, res: Response) {

try {

if (!req.body) {

res.status(400).send({

message: 'Invalid body provided'

});

return;

}

const mongoPersistence = new MongoPersistence({

name: config.database.database,

uri: config.database.uri

});

await mongoPersistence.init();

await mongoPersistence.upsert(req.body);

res.status(200).send();

} catch (err) {

res.status(500).send({

message: err,

});

}

}

private async delete(req: Request, res: Response) {

try {

if (!req.body) {

res.status(400).send({

message: 'Invalid body provided'

});

return;

}

const mongoPersistence = new MongoPersistence({

name: config.database.database,

uri: config.database.uri

});

await mongoPersistence.init();

await mongoPersistence.delete(req.body);

res.status(200).send();

} catch (err) {

res.status(500).send({

message: err,

});

}

}

}

Client-Side Code Migration

Install PowerSync Dependencies

To get started in the client-side app code, we need to set up dependencies, so go ahead and install the following:

npm install @powersync/react @powersync/react-native @journeyapps/react-native-quick-sqlite @azure/core-asynciterator-polyfill

Here’s a quick rundown of these packages:

- %%@powersync/react%%: This package provides React hooks for use with the PowerSync JavaScript Web SDK or React Native SDK. These hooks are designed to support reactivity — they are used to automatically re-render React components when query results update. They can also be used to access PowerSync connectivity status changes.

- %%@powersync/react-native%%: This is the PowerSync SDK for React Native clients.

- %%@journeyapps/react-native-quick-sqlite%%: This is a PowerSync fork of the SQLite library react-native-quick-sqlite that includes custom SQLite extensions built specifically for PowerSync.

- %%@azure/core-asynciterator-polyfill%%: This library provides a polyfill for %%Symbol.asyncIterator%% for platforms that do not support it by default.

Remove Realm

Now with all of the required dependencies for PowerSync installed, we can go ahead and remove the Realm dependencies:

npm uninstall @realm/react realm

We can also remove all directories and files related to Realm:

- The %%backend%% directory

- %%sync.config.js%%

- %%AnonAuth.tsx%%

- %%localModels.tsx%%

- %%localRealm.tsx%%

- %%syncedModels.tsx%%

- %%syncedRealm.tsx%%

Migrate Realm Schema to PowerSync

It’s important to note that unlike Realm, PowerSync does not require separate local and “syncing” models or schemas. With PowerSync, you only need to define a single client-side schema.

Models/schemas in Realm are defined using objects of type %%Realm.ObjectSchema%%. Here is an example of that from the %%rn-mbnb%% repo:

import Realm from "realm";

export const listingsAndReviewSchema: Realm.ObjectSchema = {

name: "listingsAndReview",

properties: {

_id: "string",

access: "string?",

accommodates: "int?",

address: "listingsAndReview_address",

// ... snipped for brevity ...

security_deposit: "decimal128?",

space: "string?",

summary: "string?",

transit: "string?",

weekly_price: "decimal128?",

},

primaryKey: "_id",

};

To migrate the schema above over to PowerSync, we’ll need to refactor a few things.

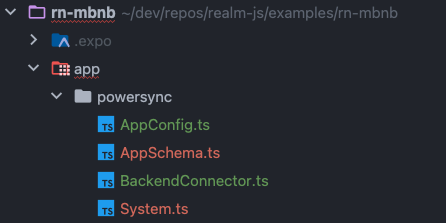

Generally it’s best to create a directory in your project for all the PowerSync-related classes and functions. Let’s create a new %%powersync%% directory. The directory structure would look something like this:

Now let’s create a new file called %%AppSchema.ts%% — i.e. %%powersync/AppSchema.ts%% and add the following code to migrate our Realm object schema to PowerSync. Since PowerSync uses SQLite as its client-side database, it makes use of three of SQLite’s supported data types: %%text%%, %%integer%% and %%real%%:

import {column, Schema, Table} from "@powersync/react-native";

const listingsAndReview = new Table({

access: column.text,

accommodates: column.integer,

address: column.text,

amenities: column.text,

availability: column.text,

bathrooms: column.integer,

bed_type: column.text,

bedrooms: column.integer,

beds: column.integer,

calendar_last_scraped: column.text,

cancellation_policy: column.text,

cleaning_fee: column.text,

description: column.text,

extra_people: column.integer,

first_review: column.text,

guests_included: column.integer,

host: column.text,

house_rules: column.text,

images: column.text,

interaction: column.text,

last_review: column.text,

last_scraped: column.text,

listing_url: column.text,

maximum_nights: column.text,

minimum_nights: column.text,

monthly_price: column.text,

name: column.text,

neighborhood_overview: column.text,

notes: column.text,

number_of_reviews: column.integer,

price: column.text,

property_type: column.text,

review_scores: column.text,

reviews: column.text,

room_type: column.text,

security_deposit: column.text,

space: column.text,

summary: column.text,

transit: column.text,

weekly_price: column.text

});

export const AppSchema = new Schema({

// The key is the client-side table name; it must match the table name used in the Sync Rules

listingsAndReviews: listingsAndReview

});

export type Database = (typeof AppSchema)["types"];

export type ListingsAndReview = Database["listingsAndReviews"];

Because we have a %%listingsAndReviews%% collection in MongoDB that we referenced in our Sync Rules above, we define a corresponding %%Table%% and specify all the columns that map to the fields of the objects in the MongoDB collection.

For nested objects, such as %%host%%, we map them to %%text%% in PowerSync and use JSON functions in the app to query, parse, and display the data in SQLite. We’ll cover this further down in this guide.
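As a rough rule of thumb, the type mapping used in the schema above can be written down as a small function. This is a sketch under assumptions rather than an official mapping: %%suggestColumnType%% is a hypothetical helper, and its rules simply mirror the choices made in this guide (for example, %%decimal128%% fields such as %%weekly_price%% and nested objects such as %%address%% are stored as %%text%%).

```typescript
type ColumnType = "text" | "integer" | "real";

// Hypothetical helper: suggest a PowerSync column type for a Realm property type string.
function suggestColumnType(realmType: string): ColumnType {
  // Strip the optional marker, e.g. "int?" -> "int".
  const base = realmType.replace(/\?$/, "");
  switch (base) {
    case "int":
    case "bool":
      return "integer";
    case "double":
    case "float":
      return "real";
    default:
      // Strings, dates, decimal128 values and embedded objects such as
      // "listingsAndReview_address" are stored as text (JSON for objects).
      return "text";
  }
}

for (const realmType of ["string?", "int?", "decimal128?", "listingsAndReview_address"]) {
  console.log(`${realmType} -> ${suggestColumnType(realmType)}`);
}
```

Each field should still be reviewed individually, since the best column type depends on how the value is queried in the app.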

Some things to note:

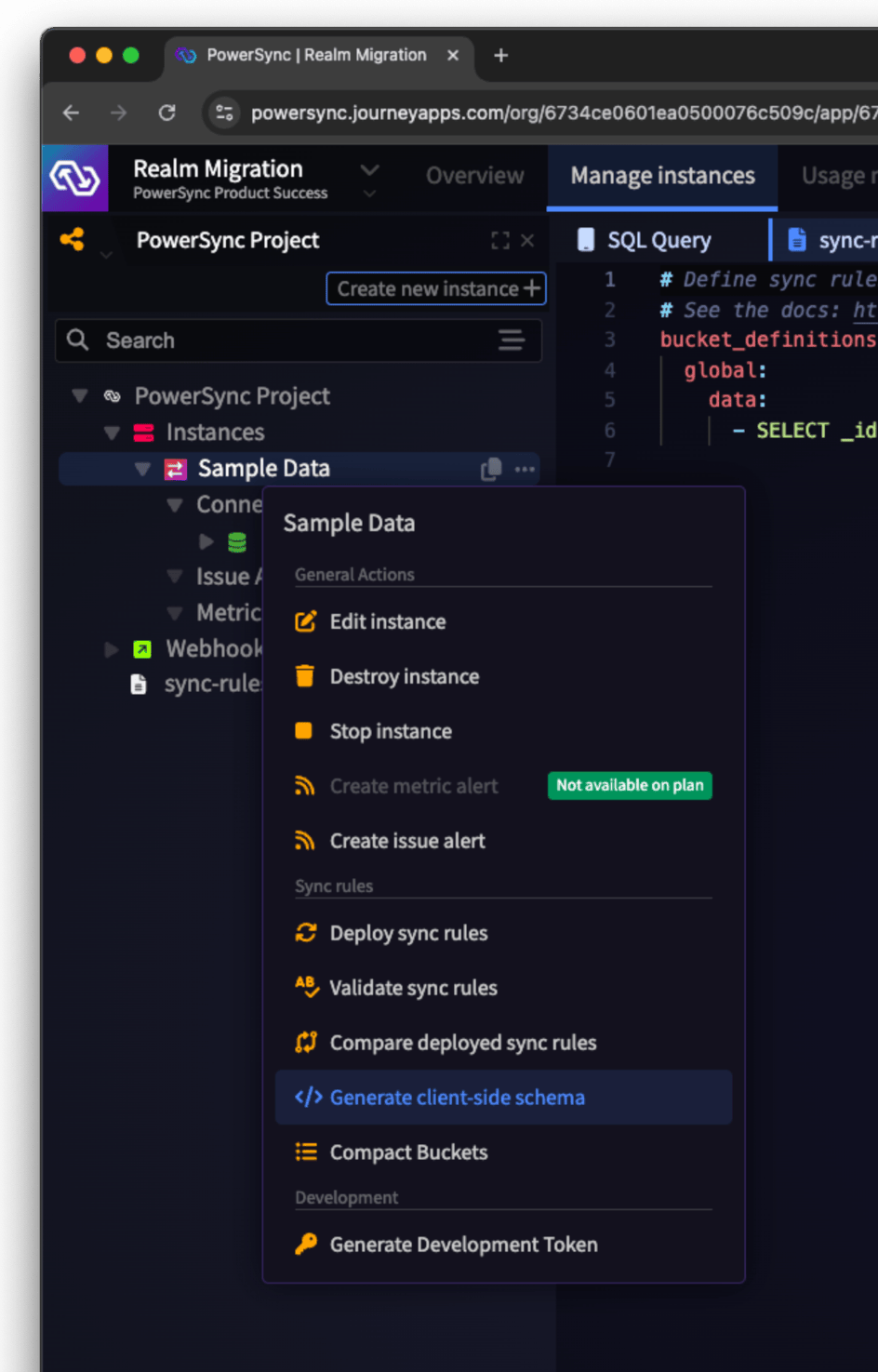

- If you’re using PowerSync Cloud, you can generate a client schema using the PowerSync Dashboard based on the Sync Rules you’ve defined for your application. Right-click on your instance and select “Generate client-side schema”.

- We don’t need to explicitly define an %%id%% column in our PowerSync client schema, as PowerSync will automatically create the %%id%% column. This column maps to the %%_id%% property of the MongoDB document, as we specified in our Sync Rules.

- PowerSync allows for indexes on columns in the SQLite database. This can be added as follows:

const listingsAndReview = new Table({

...

}, { indexes: { listingsAndReviews: ['name'] } });

- As noted above, Realm requires two different models to be defined: a local model (for local-only data) and a synced model (for data that needs to sync with the source MongoDB database). PowerSync simplifies this. To treat certain data as local-only with PowerSync (not synced back to the source MongoDB database), you can create a %%Table%% and mark it as %%localOnly%%, e.g.:

const myLocalTable = new Table({

...

}, { localOnly: true });

Now that we have the schema defined, let’s go ahead with migrating the rest of our app code to use PowerSync.

Add PowerSync Backend Connector

Before we instantiate the PowerSync database on the client-side, we first need to define a %%PowerSyncBackendConnector%% class that PowerSync uses for two things:

- %%fetchCredentials%%: A function that PowerSync calls to obtain a valid JWT to allow the client app to authenticate against the PowerSync Service. For the purposes of this guide, we’ll do anonymous authentication using a development token. In production, you would want to connect this function with your authentication provider (see the Authentication section in our docs for more details).

- %%uploadData%%: A function that PowerSync uses to process the upload queue. When changes to data are made in the client-side SQLite database, PowerSync queues up the changes and uses the %%uploadData%% function to send the updates to the source MongoDB database via the backend API that we defined previously.

Let’s define a backend connector in our %%powersync%% directory, e.g. %%BackendConnector.ts%%:

import { AbstractPowerSyncDatabase, CrudEntry, PowerSyncBackendConnector, UpdateType } from '@powersync/react-native';

import { AppConfig } from './AppConfig';

import { ApiClient } from './ApiClient';

export class BackendConnector implements PowerSyncBackendConnector {

public apiClient: ApiClient;

constructor () {

this.apiClient = new ApiClient(AppConfig.backendUrl);

}

async fetchCredentials() {

return {

endpoint: AppConfig.powersyncUrl ?? '',

token: AppConfig.powerSyncDevelopmentToken ?? '',

};

}

async uploadData(database: AbstractPowerSyncDatabase): Promise<void> {

const transaction = await database.getNextCrudTransaction();

if (!transaction) {

return;

}

let lastOp: CrudEntry | null = null;

try {

for (const op of transaction.crud) {

lastOp = op;

const record = { table: op.table, data: { ...op.opData, id: op.id } };

switch (op.op) {

case UpdateType.PUT:

await this.apiClient.upsert(record);

break;

case UpdateType.PATCH:

await this.apiClient.update(record);

break;

case UpdateType.DELETE:

await this.apiClient.delete(record);

break;

}

}

// Mark the transaction as complete only after every operation in it has been uploaded

await transaction.complete();

} catch (ex: any) {

console.debug(ex);

console.error("Data upload error - discarding:", lastOp, ex);

await transaction.complete();

}

}

}

Some things to note:

- We are using a development token in the %%fetchCredentials%% function above. When preparing for production, the %%fetchCredentials%% function should retrieve the signed-in user’s JWT from local storage or via an additional API call in the function.

- Development tokens are not long-lived — they expire after 12 hours.

To wrap up the backend connector, we’re going to need an %%ApiClient%% class which will use %%fetch%% to send the changes to the source MongoDB database. This is where dedicated functions will exist that map to the endpoints we defined above in our example Node.js backend API:

export class ApiClient {

private readonly baseUrl: string;

private readonly headers: any;

constructor(baseUrl: string) {

this.baseUrl = baseUrl;

this.headers = {

'Content-Type': 'application/json'

};

}

async update(data: any): Promise<void> {

const response = await fetch(`${this.baseUrl}/api/upload_data/`, {

method: 'PATCH',

headers: this.headers,

body: JSON.stringify(data)

});

if (response.status !== 200) {

throw new Error(`Server returned HTTP ${response.status}`);

}

}

async upsert(data: any): Promise<void> {

const response = await fetch(`${this.baseUrl}/api/upload_data/`, {

method: 'PUT',

headers: this.headers,

body: JSON.stringify(data)

});

if (response.status !== 200) {

throw new Error(`Server returned HTTP ${response.status}`);

}

}

async delete(data: any): Promise<void> {

const response = await fetch(`${this.baseUrl}/api/upload_data/`, {

method: 'DELETE',

headers: this.headers,

body: JSON.stringify(data)

});

if (response.status !== 200) {

throw new Error(`Server returned HTTP ${response.status}`);

}

}

}

Now that we have our %%BackendConnector%% defined, let’s add the last file, named %%AppConfig.ts%%:

export const AppConfig = {

powerSyncDevelopmentToken: process.env.EXPO_PUBLIC_POWERSYNC_DEVELOPMENTAL_TOKEN || '',

powersyncUrl: process.env.EXPO_PUBLIC_POWERSYNC_URL,

backendUrl: process.env.EXPO_PUBLIC_BACKEND_URL,

};

This will have three variables:

- %%powersyncUrl%%: This is the PowerSync Service instance URL that the client application will use to stream changes from.

- %%powerSyncDevelopmentToken%%: This is the development token we generated in the section earlier on. In production you would remove the development token, since development tokens are intended only for testing.

- %%backendUrl%%: This is the URL that points to the backend API and is used by the %%ApiClient%% to send changes to the source MongoDB database via the backend.
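Since environment variables that are not set evaluate to %%undefined%% (and the connector above falls back to empty strings), a misconfiguration would otherwise only surface later as a failed connection. A small check at startup can make this visible earlier. This is a minimal sketch: %%AppConfigShape%% and %%missingConfigKeys%% are hypothetical names, and the URL is a placeholder.

```typescript
type AppConfigShape = {
  powerSyncDevelopmentToken?: string;
  powersyncUrl?: string;
  backendUrl?: string;
};

// Hypothetical helper: list the config keys that are unset or empty.
function missingConfigKeys(config: AppConfigShape): string[] {
  const keys: (keyof AppConfigShape)[] = [
    "powerSyncDevelopmentToken",
    "powersyncUrl",
    "backendUrl",
  ];
  return keys.filter((key) => !config[key]);
}

// Placeholder values for illustration only.
const missing = missingConfigKeys({
  powersyncUrl: "https://powersync.example.invalid",
  backendUrl: "",
});
if (missing.length > 0) {
  console.warn(`Missing configuration: ${missing.join(", ")}`);
}
```

In the app, the same function could be called with %%AppConfig%% before the PowerSync database is initialized.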

Set up PowerSync Database and Connect UI

In our %%powersync%% directory, let’s add a new file called %%System.ts%%. This will be used later on in our PowerSync Provider. Here we instantiate the backend connector and client-side PowerSync database, and create a %%useSystem%% hook that wraps React’s %%useContext%% to make these available as context to React components:

import '@azure/core-asynciterator-polyfill';

import React from 'react';

import { PowerSyncDatabase } from '@powersync/react-native';

import { BackendConnector } from './BackendConnector';

import { AppSchema } from './AppSchema';

export class System {

powersync: PowerSyncDatabase;

backendConnector: BackendConnector;

constructor() {

this.backendConnector = new BackendConnector();

this.powersync = new PowerSyncDatabase({

schema: AppSchema,

database: {

dbFilename: 'sqlite.db'

}

});

}

async init() {

await this.powersync.init();

await this.powersync.connect(this.backendConnector);

}

}

export const system = new System();

export const SystemContext = React.createContext(system);

export const useSystem = () => React.useContext(SystemContext);

Some important things to note:

- We import %%@azure/core-asynciterator-polyfill%% because we’re going to be using watched queries, and this polyfill is required to do so.

- When instantiating the %%PowerSyncDatabase%%, we must specify the %%schema%% and the database options. The most important part here is the %%dbFilename%%.

- The %%init%% function on %%System%% calls the %%init()%% and %%connect()%% functions on the %%PowerSyncDatabase%% to start the sync process with PowerSync.

- The %%connect()%% function requires a %%BackendConnector%% instance, because the PowerSync database will call the %%fetchCredentials%% function when PowerSync tries to authenticate, and the %%uploadData%% function to process the upload queue and send writes back to the source MongoDB database via the API we defined earlier.


To use the provider, we need to update the existing %%AppWrapper.tsx%% file. Here’s what that file looks like with Realm, before migrating to PowerSync:

export const AppWrapper: React.FC<{

appId: string;

}> = ({ appId }) => {

return (

<>

<SafeAreaView style={styles.screen}>

<AppProvider id={appId}>

<UserProvider fallback={<AnonAuth />}>

<SyncedRealmProvider

shouldCompact={() => true}

sync={{

flexible: true,

onError: (_, error) => {

// Comment out to hide errors

console.error(error);

},

existingRealmFileBehavior: {

type: OpenRealmBehaviorType.OpenImmediately,

},

}}

>

<LocalRealmProvider>

<AirbnbList />

</LocalRealmProvider>

</SyncedRealmProvider>

</UserProvider>

</AppProvider>

</SafeAreaView>

</>

);

};

You can see that the Realm implementation uses %%AppProvider%%, %%UserProvider%% and %%SyncedRealmProvider%%. The %%SyncedRealmProvider%% also requires some options to be set, such as callback functions for error handling.

To migrate this to use PowerSync, we need to update a few things, starting with removing the Realm providers and importing our newly-created %%System.ts%% file. Then we use our %%useSystem%% hook and call %%init()%% to initialize the client-side PowerSync database and connect to the PowerSync Service instance. We then memoize the %%system%% and pass that to our %%PowerSyncContext%% provider. Here is what %%AppWrapper.tsx%% would look like:

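import React, { useMemo } from "react";

import { SafeAreaView } from "react-native";

import { PowerSyncContext } from "@powersync/react";

import { System, useSystem } from "./powersync/System";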

export const AppWrapper: React.FC = () => {

const system: System = useSystem();

React.useEffect(() => {

system.init();

}, []);

const db = useMemo(() => {

return system.powersync;

}, []);

return (

<PowerSyncContext.Provider value={db}>

<SafeAreaView style={styles.screen}>

<AirbnbList />

</SafeAreaView>

</PowerSyncContext.Provider>

);

};

As you can see above, %%AirbnbList%% is the component mainly used for the UI in the %%rn-mbnb%% app, and therefore that’s what we will focus on next.

Accessing the Database

In %%AirbnbList.tsx%% in the original %%rn-mbnb%% example app, you will notice that both ‘local’ and ‘synced’ Realm databases are used, which are accessed using %%useLocalRealm%% and %%useSyncedRealm%% hooks:

import { useLocalQuery, useLocalRealm } from "./localRealm";

import { useSyncedQuery, useSyncedRealm } from "./syncedRealm";

export const AirbnbList = () => {

// ...

const localRealm = useLocalRealm();

const syncedRealm = useSyncedRealm();

// ...

}

By contrast, PowerSync only has a single client-side database, which we set up in %%System.ts%% above and passed to our %%PowerSyncContext.Provider%%. We then access it with the %%usePowerSync%% hook from the %%@powersync/react%% package:

import { usePowerSync, useQuery } from "@powersync/react";

export const AirbnbList = () => {

const powersync = usePowerSync();

// ...

}

Migrate Database Queries

In the Realm %%rn-mbnb%% repo, listings are searched using a MongoDB Atlas Search index. For the purposes of demonstrating how one would migrate an app from Realm / Atlas Device Sync to PowerSync, we’ve opted to use standard SQLite queries with PowerSync in this guide. (PowerSync does offer advanced client-side search capabilities using the SQLite FTS5 extension; see our docs for more information.)

The Realm version of the %%rn-mbnb%% example app uses a search function in %%AirbnbList.tsx%% that checks a cache or Realm database for results: (note that in the %%rn-mbnb%% example app, the user can simulate offline or online mode, and this is what the %%offlineMode%% state variable refers to)

// Perform the search method based on the current state

const doSearch = async () => {

if (searchTerm !== "") {

// Check the cache first

if (cache.length > 0) {

const ids = cache[0].results.reduce((res, cur) => {

res.push(cur);

return res;

}, []);

setResultIds(ids);

setResultMethod(ResultMethod.Cache);

// If we are in offline mode, we will do a full text search on the current Realm

} else if (offlineMode) {

const localSearchResults = syncedRealm

.objects(ListingsAndReview)

.filtered("name TEXT $0", searchTerm);

const ids = localSearchResults.map((item) => item._id);

setResultIds(ids);

setResultMethod(ResultMethod.Local);

// If we are online, we will call the search function on the user object

} else {

const { result, error } = await user.functions.searchListings({

searchPhrase: searchTerm,

pageNumber: 1,

pageSize: 100,

});

if (error) {

console.error(error);

} else {

const ids = result.map((item) => item._id);

setResultIds(ids);

setResultMethod(ResultMethod.Remote);

// Cache the results

localRealm.write(() => {

localRealm.create(SearchCache, {

searchTerm: searchTerm.toLowerCase(),

results: ids,

});

});

}

}

} else {

setResultIds([]);

}

};

In the PowerSync version of %%AirbnbList.tsx%%, we do away with the dedicated search function. Instead, we update the search term based on user input and use the PowerSync %%useQuery%% hook to create a watched query against the SQLite database (a watched query re-runs whenever the underlying data changes, so the component always renders current results):

const { data: records } = useQuery<ListingsAndReviewRecord>(`

SELECT * FROM ${LISTINGS_REVIEW_TABLE} WHERE name LIKE '%${searchTerm}%'

`);

The SQLite query selects all the columns from %%listingsAndReviews%% and filters the results based on the pattern matching condition (using the %%LIKE%% operator). The pattern %%'%${searchTerm}%'%% means that the query will look for any entries in the %%name%% column that contain the value of %%searchTerm%% anywhere within the string.
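Because the search term comes from user input, interpolating it directly into the SQL string means that a quote character in the input breaks the query, and %%%%% or %%_%% typed by the user act as wildcards. A parameterized variant avoids both. The following is a minimal sketch: %%buildNameSearch%% is a hypothetical helper, and the table name is still interpolated on the assumption that it is a constant defined in the app and never user input.

```typescript
// Hypothetical helper: build a parameterized LIKE query instead of interpolating user input.
function buildNameSearch(table: string, searchTerm: string): { sql: string; params: string[] } {
  // Escape the escape character and the LIKE wildcards so that "\", "%" and "_"
  // typed by the user are matched literally.
  const escaped = searchTerm.replace(/[\\%_]/g, (ch) => `\\${ch}`);
  return {
    // The table name is assumed to be an app-defined constant, not user input.
    sql: `SELECT * FROM ${table} WHERE name LIKE ? ESCAPE '\\'`,
    params: [`%${escaped}%`],
  };
}

const { sql, params } = buildNameSearch("listingsAndReviews", "sea view");
console.log(sql, params);
```

The resulting %%sql%% and %%params%% could then be passed to the hook as %%useQuery(sql, params)%%, since %%useQuery%% accepts an array of query parameters as its second argument.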

In addition to the %%useQuery%% hook for watched queries, other APIs provided by PowerSync to execute queries include:

- %%powersync.get()%% — executes a read-only query and returns the first result

- %%powersync.execute()%% — executes a write (%%INSERT%%/%%UPDATE%%/%%DELETE%%) query

Migrate Render Functions

The %%renderListing%% function in the Realm version of %%AirbnbList.tsx%% uses FastImage, a component which improves the performance of React Native’s %%Image%% component by applying aggressive caching amongst other things:

const renderListing: ListRenderItem<ListingsAndReview> = useCallback(

({ item }) => (

<Pressable

onPress={() => {

alert(JSON.stringify(item.toJSON()));

}}

>

<View style={styles.listing}>

<FastImage

style={styles.image}

source={{

uri: item.images.picture_url,

priority: FastImage.priority.normal,

cache: FastImage.cacheControl.immutable,

}}

/>

<Text>{item.name}</Text>

</View>

</Pressable>

),

[]

);

In the PowerSync version, we opted to simply use React Native’s %%Image%% component instead.

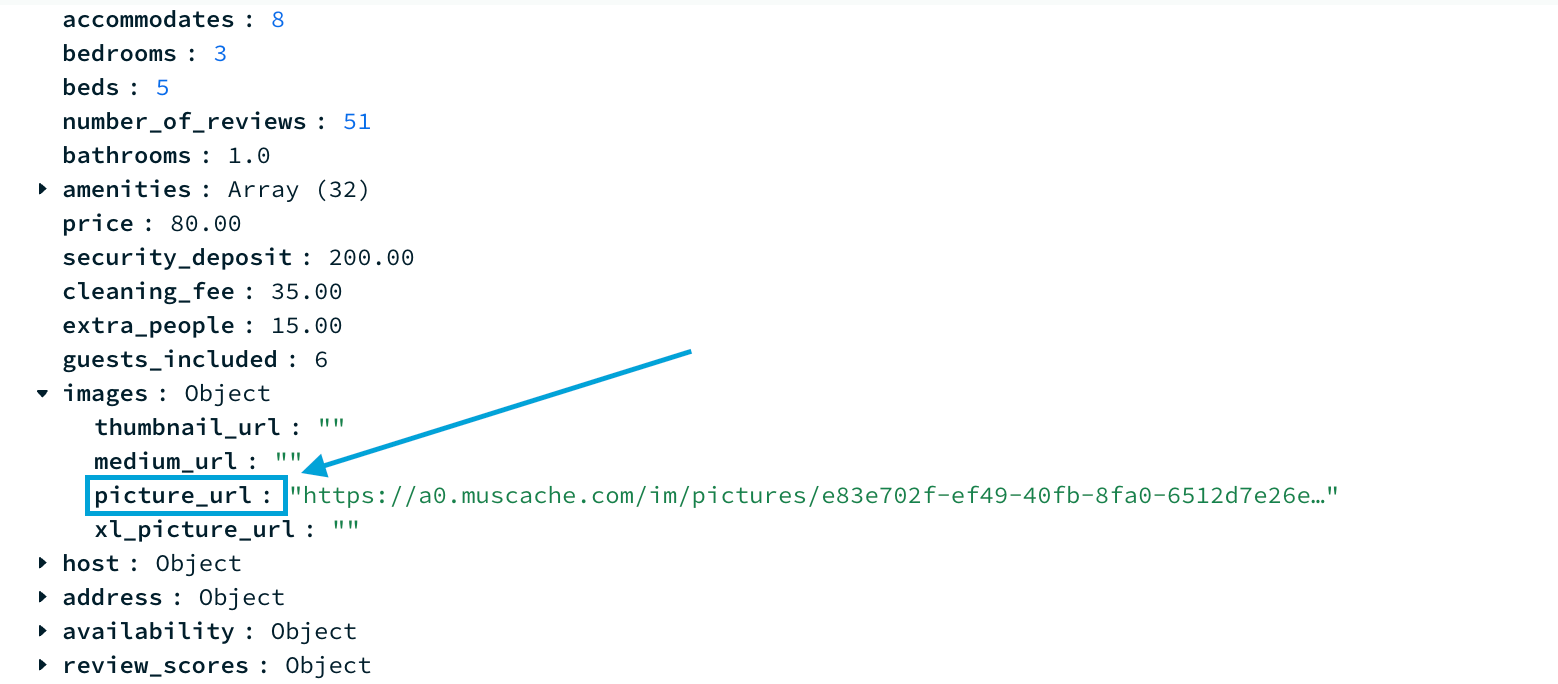

In the code snippet above, you will notice that %%picture_url%% is a nested field on %%images%% in the %%listingsAndReviews%% collection. Here is an example document from the demo dataset for the example app:

When we migrated the schema earlier in this guide, we noted that we mapped nested objects in MongoDB to %%text%% in the client-side SQLite database with PowerSync, so that we can work with them as JSON data.
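To make concrete what a %%text%% column containing JSON means for application code, the value of the %%images%% column can be parsed in TypeScript as follows. This is a minimal sketch for illustration: %%pictureUrlFromImages%% is a hypothetical helper and the sample value is made up.

```typescript
// Hypothetical helper: read `picture_url` from the JSON text stored in the `images` column.
function pictureUrlFromImages(imagesJson: string | null): string | null {
  if (!imagesJson) {
    return null;
  }
  try {
    const images: unknown = JSON.parse(imagesJson);
    if (typeof images === "object" && images !== null && "picture_url" in images) {
      const url = (images as { picture_url: unknown }).picture_url;
      return typeof url === "string" ? url : null;
    }
    return null;
  } catch {
    return null; // the column did not contain valid JSON
  }
}

// Made-up column value for the sketch.
const imagesColumn = '{"thumbnail_url":"","picture_url":"https://example.invalid/photo.jpg"}';
console.log(pictureUrlFromImages(imagesColumn));
```

Parsing in application code works, but it runs for every rendered row, which is one reason to extract the field in SQL or in the Sync Rules instead.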

Accordingly, there are a couple of ways to handle nested objects on the client with PowerSync:

Option 1: Extract nested field using SQLite %%json_extract%% function: If you want to keep the schema on the client as close as possible to the schema in the backend MongoDB database, this would be the preferred approach.

We can extract the %%picture_url%% from the %%images%% object using %%json_extract()%%. With this approach it’s important to note that the client-side schema does not contain a reference to the %%picture_url%% column — we only have the top-level %%images%% field in our schema which is of type %%text%% and will contain JSON. We therefore need to update the return type of %%useQuery%% to include the additional column: %%useQuery<ListingsAndReviewRecord & { picture_url: string }>%%

const { data: records } = useQuery<ListingsAndReviewRecord & { picture_url: string }>(`

SELECT *, json_extract(images, '$.picture_url') AS picture_url FROM ${LISTINGS_REVIEW_TABLE} WHERE name LIKE '%${searchTerm}%'

`);

Option 2: Extract nested field in the Sync Rules: If the other data in the nested object is never used on the client, this approach would be appropriate.

To extract %%picture_url%% from the %%images%% document in our Sync Rules, we can do the following in %%sync-rules.yaml%%:

SELECT _id as id, images ->> 'picture_url' AS picture_url, * from "listingsAndReviews"

We also need to update our client-side PowerSync schema accordingly (in %%AppSchema.ts%%):

const listingsAndReviews = new Table({

...

picture_url: column.text,

...

});

To finish migrating the %%renderListing%% function, we can use React Native’s %%Image%% component and simply display the image URI contained in the %%picture_url%%:

const renderListing: ListRenderItem<ListingsAndReview> = useCallback(
  ({ item }) => (
    <Pressable
      onPress={() => {
        alert(JSON.stringify(item));
      }}
    >
      <View style={styles.listing}>
        <Image
          style={styles.image}
          source={{uri: item.picture_url}}
        />
        <Text>{item.name}</Text>
      </View>
    </Pressable>
  ),
  []
);

Conclusion

Migrating from MongoDB Realm & Atlas Device Sync to PowerSync involves a bit of work but is straightforward, as the high-level architecture is similar. On the client side, SQLite replaces Realm as the in-app database. On the server side, the PowerSync Service replaces Atlas Device Sync, with the notable difference that data uploads from the client app are sent to your own backend API and not back to the PowerSync Service. Flexible Sync Rules allow you to control which data each user should have local access to. We hope this guide helps you migrate your app to PowerSync with confidence!

Appendix: Further rn-mbnb Migration Notes

The following notes are specific to migrating the %%rn-mbnb%% example app and do not necessarily apply generally to any Realm / Atlas Device Sync to PowerSync migration. We have included them here to help developers compare the PowerSync implementation of the %%rn-mbnb%% example app with the original Realm implementation.

Effect Hooks

The Realm version does a few things using React %%useEffect%% hooks related to cache management and Realm subscriptions:

useEffect(() => {
  const allIds = fullCache.reduce((acc, cache) => {
    acc.push(...cache.results);
    return acc;
  }, []);
  const uniqueIds = [...new Set(allIds)];
  syncedRealm
    .objects(ListingsAndReview)
    .filtered("_id in $0", uniqueIds)
    .subscribe({ name: "listing" });
  setCachedIds(uniqueIds);
}, [fullCache]);

useEffect(() => {
  getDatabaseSize();
  getCacheSize();
});

By contrast, the PowerSync version has a single %%useEffect%% hook that manages the connection state based on %%offlineMode%%. As mentioned above, the user of the %%rn-mbnb%% example app can simulate offline or online mode, and this is what the %%offlineMode%% state variable tracks. We call %%disconnect()%% for “offline mode”, which closes the sync connection to the PowerSync Service instance, and %%connect()%% when going back online, which reopens it.

useEffect(() => {
  offlineMode
    ? powersync.disconnect()
    : powersync.connect(new BackendConnector());
}, [offlineMode]);

PowerSync also provides a %%disconnectAndClear()%% function, which disconnects and clears the SQLite database. This is typically used when the user logs out.
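As a rough sketch of how these calls might be wired together, the helpers below toggle the sync connection and handle logout. %%SyncClient%% is a hypothetical minimal interface that declares only the three methods named in this guide (the real PowerSync database object has a richer API and these methods may accept additional options), and %%applyOfflineMode%% and %%logOut%% are illustrative names, not part of the SDK.

```typescript
// Hypothetical minimal interface: only the methods this guide mentions.
interface SyncClient<Connector> {
  connect(connector: Connector): Promise<void>;
  disconnect(): Promise<void>;
  disconnectAndClear(): Promise<void>;
}

// Close the sync connection in offline mode, open it otherwise.
async function applyOfflineMode<Connector>(
  client: SyncClient<Connector>,
  offlineMode: boolean,
  createConnector: () => Connector
): Promise<void> {
  if (offlineMode) {
    await client.disconnect();
  } else {
    await client.connect(createConnector());
  }
}

// On logout, disconnect and clear the local SQLite database.
async function logOut<Connector>(client: SyncClient<Connector>): Promise<void> {
  await client.disconnectAndClear();
}
```

Inside the component, the effect could then call something like %%applyOfflineMode(powersync, offlineMode, () => new BackendConnector())%%, and a logout button handler could call %%logOut(powersync)%%.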

Cache Management

There is a %%clearCache%% function in the Realm version which clears image and database caches:

// Clear the image cache and Realm databases
const clearCache = useCallback(async () => {
  await FastImage.clearMemoryCache();
  await FastImage.clearDiskCache();
  // NOTE: If you are offline, the data will not clear until you go online
  // Atlas will return a change set which will remove the data.
  syncedRealm.subscriptions.update((mutableSubs) => {
    mutableSubs.removeAll();
  });
  // WARNING: This will delete all data in the synced Realm database.
  // Permissions should be set so the user cannot actually perform this.
  // Atlas Device Sync will revert this change.
  // This should only be necessary if offline.
  if (offlineMode) {
    syncedRealm.write(() => {
      syncedRealm.deleteAll();
    });
  }
  // Clear the local cache
  localRealm.write(() => {
    localRealm.deleteAll();
  });
  alert("Cache cleared!");
}, []);

The PowerSync version does not implement any explicit cache management. The local SQLite database contains all of the data that the client app requires, and Sync Rules are used to determine what data should be persisted on the client device.

State Management

Related to its more advanced search and caching implementation, the Realm version has several state management variables such as %%resultIds%%, %%cachedIds%%, %%resultMethod%%, %%syncedDbSize%%, %%localDbSize%%, and %%cacheSize%%, in addition to %%offlineMode%% and %%searchTerm%%:

const [resultIds, setResultIds] = useState<string[]>([]);
const [offlineMode, setOfflineMode] = useState(false);
const [searchTerm, setSearchTerm] = useState("");
const [cachedIds, setCachedIds] = useState<string[]>([]);
const [resultMethod, setResultMethod] = useState<ResultMethod>(ResultMethod.None);

The PowerSync version has fewer state management variables: %%offlineMode%%, %%searchTerm%%, %%inputValue%%, and %%records%% (the search query results):

const [offlineMode, setOfflineMode] = useState(false);
const [searchTerm, setSearchTerm] = useState("");
const [inputValue, setInputValue] = useState("");
const { data: records } = useQuery<ListingsAndReviewRecord>(
  // Bind the search term as a parameter so quotes in user input cannot break the SQL
  `SELECT * FROM ${LISTINGS_REVIEW_TABLE} WHERE name LIKE ?`,
  [`%${searchTerm}%`]
);
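
Because the search term comes from user input, a percent sign or underscore typed by the user is treated as a wildcard by %%LIKE%%. The following is a minimal sketch of one way to handle this: bind the term as a query parameter and escape the wildcard characters. The %%escapeLikeTerm%% and %%buildListingSearch%% helpers are hypothetical names introduced for illustration, and the table name passed in is assumed to be a trusted constant such as %%LISTINGS_REVIEW_TABLE%%.

```typescript
// Hypothetical shape for a query plus its bound parameters.
interface SearchQuery {
  sql: string;
  params: string[];
}

// Prefix backslash, percent and underscore with a backslash so that they are
// matched literally (paired with ESCAPE '\' in the SQL below).
function escapeLikeTerm(term: string): string {
  return term.replace(/[\\%_]/g, (ch) => "\\" + ch);
}

function buildListingSearch(tableName: string, searchTerm: string): SearchQuery {
  return {
    // tableName is expected to be a trusted constant, never user input.
    sql: `SELECT * FROM ${tableName} WHERE name LIKE ? ESCAPE '\\'`,
    // The surrounding % wildcards are added after escaping the user's text.
    params: [`%${escapeLikeTerm(searchTerm)}%`],
  };
}

// Sample call with a made-up search term containing both wildcard characters.
const search = buildListingSearch("listingsAndReviews", "100%_cozy");
```

The result could then be passed along as %%useQuery<ListingsAndReviewRecord>(search.sql, search.params)%%, assuming the form of %%useQuery%% that takes a query string followed by a parameter array.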